Role of Embedded Systems and Their Future in Industrial Automation

Embedded systems have become increasingly important in today’s world of automation. In industrial automation in particular, they play a central role in speeding up production and controlling factory systems. In recent years, embedded systems have become an essential component across many industries and are transforming how industrial processes are automated. Integrating these systems into devices and machinery streamlines manufacturing processes, enhances performance, and optimizes efficiency. A survey report by market.us predicts that the global embedded system market will reach USD 173.4 billion by 2032, growing at a CAGR of 6.8% over the 2023 to 2032 forecast period. This growth is driven by rising demand for smart electronic devices, the Internet of Things (IoT), and automation across a range of industries. This article discusses the role of embedded systems in industrial automation and some promising prospects for their future.

What is the role of embedded systems and why are they essential in industrial automation?

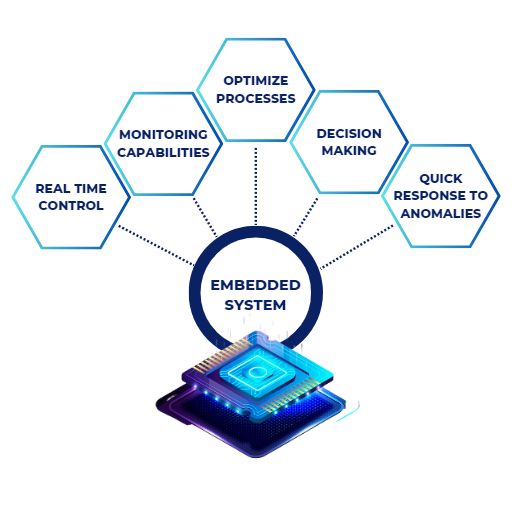

Embedded system based applications bring a wide range of benefits and capabilities to industry. Their real-time control and monitoring capabilities let industries optimize processes, make informed decisions, and respond swiftly to anomalies as they arise. By automating repetitive tasks and streamlining processes, embedded systems improve productivity and efficiency. Combined with cutting-edge technologies such as AI, ML, and IoT, they also strengthen safety through proactive monitoring, early hazard detection, and effective risk management, and they create new opportunities for advanced analytics, proactive maintenance, and autonomous decision-making.

Roles of embedded systems in industrial automation

Embedded systems are an indispensable component of industrial automation, driving innovation and enabling businesses to thrive in a dynamic and competitive landscape.

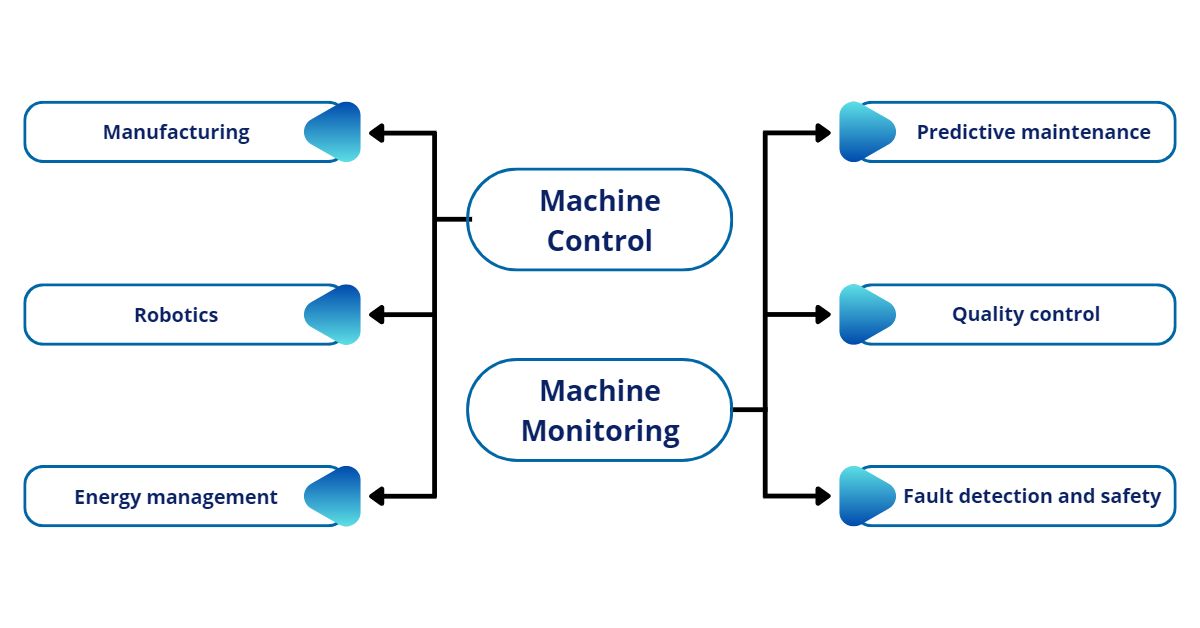

Embedded systems in industrial automation fall into two main categories: machine control and machine monitoring.

1. Machine control: One of the main uses of embedded systems in industrial automation is controlling equipment and processes. Acting as the central control point, an embedded system precisely controls and coordinates manufacturing equipment, sensors, and devices. It receives input from sensors, processes the data, and produces output signals that drive motors, valves, actuators, and other components. By managing and optimizing these control systems, embedded systems allow industrial processes to run precisely and efficiently. Let’s look at a few machine control use cases.
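To make the receive-process-output cycle concrete, here is a minimal Python sketch of one of the simplest control laws, an on/off (hysteresis) controller. It assumes a hypothetical temperature sensor and cooling valve; `HysteresisController` and `run_control_cycle` are illustrative names, not part of any library, and on real hardware the same logic would usually live in firmware on the target device.

```python
from dataclasses import dataclass


@dataclass
class HysteresisController:
    """On/off controller for a hypothetical cooling valve.

    The valve opens when the measured temperature rises above
    setpoint + band and closes once it falls below setpoint - band.
    """
    setpoint: float
    band: float
    valve_open: bool = False

    def step(self, measured: float) -> bool:
        # Process: compare the sensor input with the two switching thresholds.
        if measured > self.setpoint + self.band:
            self.valve_open = True
        elif measured < self.setpoint - self.band:
            self.valve_open = False
        # Inside the band the previous command is kept, which stops the
        # actuator from toggling rapidly ("chattering") around the setpoint.
        return self.valve_open  # output: the command sent to the actuator


def run_control_cycle(controller: HysteresisController, readings: list[float]) -> list[bool]:
    """Feed successive sensor readings through the controller and collect
    the actuator command produced for each one."""
    return [controller.step(r) for r in readings]


ctrl = HysteresisController(setpoint=60.0, band=2.0)
print(run_control_cycle(ctrl, [58.0, 61.0, 62.5, 61.0, 57.5]))
# [False, False, True, True, False]
```

The dead band between the two thresholds is the main design choice: a wider band means fewer valve operations but a larger temperature swing.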

Manufacturing: Embedded systems are extensively used in manufacturing processes for machine control. They regulate and coordinate the operation of machinery, such as assembly lines, CNC machines, and industrial robots. Embedded systems ensure precise control over factors like speed, position, timing, and synchronization, enabling efficient and accurate production.
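Precise control of speed or position is often implemented with a PID (proportional-integral-derivative) loop. The article does not name a specific control law, so the following is an assumption: a minimal discrete PID controller driving a hypothetical first-order motor model, with gains and time constant chosen only for illustration.

```python
class PID:
    """Discrete PID controller sampled every `dt` seconds, with output
    clamping and a simple conditional-integration anti-windup."""

    def __init__(self, kp, ki, kd, dt, out_min, out_max):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured):
        error = setpoint - measured
        # No derivative on the very first sample (there is no previous error).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        # Clamp to the actuator range; keep the integral unchanged while
        # saturated so it does not "wind up" and cause overshoot later.
        if output > self.out_max:
            return self.out_max
        if output < self.out_min:
            return self.out_min
        self.integral = candidate
        return output


def simulate_motor(pid, setpoint, seconds, tau=0.5):
    """Hypothetical first-order motor model: speed approaches the commanded
    drive value with time constant `tau` (steady-state gain of 1)."""
    speed = 0.0
    for _ in range(round(seconds / pid.dt)):
        drive = pid.update(setpoint, speed)
        speed += pid.dt / tau * (drive - speed)  # explicit Euler step of the plant
    return speed


pid = PID(kp=2.0, ki=5.0, kd=0.0, dt=0.01, out_min=0.0, out_max=200.0)
print(simulate_motor(pid, setpoint=100.0, seconds=5.0))  # settles near the 100.0 setpoint
```

The integral term removes steady-state error, while clamping the output and pausing integration during saturation keeps large setpoint changes from causing excessive overshoot.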

Robotics: Embedded systems play a critical role in controlling robotic systems. They govern the movements, actions, and interactions of robots in industries such as automotive manufacturing, warehouse logistics, and healthcare. Embedded systems enable robots to perform tasks like pick and place, welding, packaging, and inspection with high precision and reliability.

Energy management: Embedded systems are employed in energy management systems to monitor and control energy usage in industrial facilities. They regulate power distribution and optimize consumption based on demand and efficiency. These systems continuously monitor energy consumption parameters such as power usage, equipment efficiency, and operational patterns. By analyzing the collected data, they can detect patterns and trends that point to energy-saving opportunities, for example periods of excessive consumption or equipment malfunctions that waste energy. These insights let businesses track and analyze their energy data, implement conservation measures, optimize energy usage, and reduce waste.
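Spotting excessive consumption during specific periods can be as simple as comparing metered consumption with an expected baseline. This minimal sketch assumes hourly kWh readings and a per-hour baseline profile; the 15 % tolerance, the sample data, and the function names are all hypothetical.

```python
def flag_excess_intervals(readings_kwh, baseline_kwh, tolerance=0.15):
    """Return the indices of metering intervals whose consumption exceeds the
    expected baseline by more than `tolerance` (0.15 means 15 %)."""
    if len(readings_kwh) != len(baseline_kwh):
        raise ValueError("readings and baseline must have the same length")
    return [
        i
        for i, (used, expected) in enumerate(zip(readings_kwh, baseline_kwh))
        if used > expected * (1.0 + tolerance)
    ]


def excess_energy_kwh(readings_kwh, baseline_kwh, flagged):
    """Total energy above baseline in the flagged intervals: a rough estimate
    of what could be saved if those intervals ran at baseline."""
    return sum(readings_kwh[i] - baseline_kwh[i] for i in flagged)


hourly_use = [40.0, 42.0, 55.0, 41.0, 70.0]  # hypothetical meter readings
expected = [40.0, 40.0, 45.0, 40.0, 45.0]    # hypothetical baseline profile
flagged = flag_excess_intervals(hourly_use, expected)
print(flagged, excess_energy_kwh(hourly_use, expected, flagged))  # [2, 4] 35.0
```

In practice the baseline would come from historical data for the same shift, day, or production load, so that normal peaks are not reported as waste.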

Classes of embedded systems in industrial automation

2. Machine monitoring: Embedded systems are also used for monitoring in industrial automation. Equipped with sensors and interfaces, they collect real-time data from different points in the production environment, such as temperature, pressure, humidity, vibration, and other relevant parameters. Embedded systems process and analyze this data, in many cases using machine learning and deep learning algorithms, to provide valuable insight into the performance, status, and health of equipment and processes. By continuously monitoring critical variables, they enable predictive maintenance, early fault detection, and proactive decision-making, which improves reliability, reduces downtime, and enhances operational efficiency. Some examples of machine monitoring:

Predictive maintenance: Intelligent embedded systems enable real-time monitoring of machine health and performance. Using data from sensors embedded in the machinery, they analyze parameters such as temperature, vibration, and operating conditions. This data is used to identify irregularities and anticipate possible malfunctions, enabling proactive maintenance and minimizing unexpected downtime.
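Identifying irregularities in sensor data is essentially anomaly detection. A trained machine learning model is one option; as a lightweight statistical substitute, this minimal sketch flags vibration readings using a rolling z-score against recent history. The window size, the 3-sigma limit, the sample values, and the class name are assumptions chosen for illustration.

```python
import statistics
from collections import deque


class VibrationMonitor:
    """Flags readings (e.g. vibration RMS) that deviate strongly from recent
    history, using a rolling z-score. This is a simple statistical stand-in
    for a machine learning model, not a trained model."""

    def __init__(self, window=20, z_limit=3.0):
        self.history = deque(maxlen=window)  # most recent `window` readings
        self.z_limit = z_limit

    def check(self, value):
        anomalous = False
        if len(self.history) >= 2:  # a standard deviation needs two points
            data = list(self.history)
            mean = statistics.fmean(data)
            stdev = statistics.stdev(data)
            if stdev > 0 and abs(value - mean) / stdev > self.z_limit:
                anomalous = True
        self.history.append(value)
        return anomalous


monitor = VibrationMonitor()
healthy = [1.0, 1.1, 0.9, 1.0] * 5                # hypothetical healthy readings
print(any([monitor.check(v) for v in healthy]))  # False
print(monitor.check(5.0))                        # True
```

Because the anomalous reading is still appended to the history, a persistent shift in vibration level gradually becomes the new normal; a real system might freeze the baseline or raise a maintenance ticket instead.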

Quality control: Here, machine monitoring focuses on product quality and consistency during manufacturing. Embedded systems monitor variables such as pressure, speed, dimensions, and other relevant parameters to maintain consistent quality standards. For example, an embedded system may monitor pressure levels during injection molding to ensure that the produced components meet the required specifications. If the pressure deviates from the acceptable range, the system can trigger an alarm or corrective action, keeping product quality high.
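The injection molding example maps naturally onto a range check with an alarm. In this minimal sketch the pressure limits (800 to 1200 bar) are hypothetical values, and the rule that an alarm fires only after three consecutive out-of-range samples is an added assumption meant to avoid alarms on single noisy readings.

```python
from enum import Enum


class PressureStatus(Enum):
    OK = "ok"
    LOW = "low"
    HIGH = "high"


def classify(pressure_bar, low_limit, high_limit):
    """Compare one pressure sample against the acceptable range."""
    if pressure_bar < low_limit:
        return PressureStatus.LOW
    if pressure_bar > high_limit:
        return PressureStatus.HIGH
    return PressureStatus.OK


class PressureAlarm:
    """Raises an alarm only after `persist` consecutive out-of-range samples,
    so a single noisy reading does not stop the machine."""

    def __init__(self, low_limit, high_limit, persist=3):
        self.low_limit, self.high_limit = low_limit, high_limit
        self.persist = persist
        self.violations = 0  # length of the current out-of-range streak

    def update(self, pressure_bar):
        status = classify(pressure_bar, self.low_limit, self.high_limit)
        self.violations = 0 if status is PressureStatus.OK else self.violations + 1
        return status, self.violations >= self.persist


alarm = PressureAlarm(low_limit=800.0, high_limit=1200.0)  # hypothetical limits in bar
for p in [1000.0, 1250.0, 1000.0, 1250.0, 1260.0, 1270.0]:
    status, raise_alarm = alarm.update(p)
    print(p, status.name, raise_alarm)
```

Only the last sample, the third consecutive HIGH reading, raises the alarm; the isolated spike to 1250 bar is reported as HIGH but does not.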

Fault detection and safety: Machine monitoring systems detect potential faults or unsafe conditions in manufacturing environments. They continuously monitor machine performance and operating conditions to identify deviations from normal operating parameters. For instance, if an abnormal temperature rise in a motor indicates a potential fault or overheating, the embedded system can trigger an alarm or safety measure to prevent further damage or accidents. The focus here is on maintaining a safe working environment and protecting both equipment and personnel.
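The motor overheating example can be sketched as two checks: an absolute temperature limit and a limit on how fast the temperature rises. In this minimal sketch both thresholds, the sampling intervals, and the function name are hypothetical; real limits depend on the motor and its insulation rating.

```python
def check_motor_temperature(samples_c, interval_s, max_temp_c=110.0, max_rise_c_per_min=5.0):
    """Scan motor temperatures (deg C) sampled every `interval_s` seconds.

    Returns (index, reason) for the first sample that is above the absolute
    limit or rising faster than the allowed rate, or None if all are normal.
    """
    for i, temp in enumerate(samples_c):
        if temp > max_temp_c:
            return i, "over-temperature"
        if i > 0:
            # Convert the change between consecutive samples into deg C per minute.
            rise_per_min = (temp - samples_c[i - 1]) * 60.0 / interval_s
            if rise_per_min > max_rise_c_per_min:
                return i, "rapid rise"
    return None


print(check_motor_temperature([70.0, 70.5, 71.0, 72.5], interval_s=10.0))  # (3, 'rapid rise')
```

Checking the rate of rise as well as the absolute value lets the system react to a developing fault, and trigger an alarm or safe shutdown, before the hard limit is reached.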

The Future of Embedded Systems in Industrial Automation

Embedded systems are poised to play a major role in industrial automation as demand for automation continues to grow. They have the potential to improve efficiency, increase productivity, and drive innovation in industrial processes, and their integration with emerging technologies such as the Internet of Things (IoT) and Artificial Intelligence (AI) is expected to extend their capabilities even further. As these trends converge, embedded systems will remain central to how businesses compete in industrial automation.

Softnautics specializes in providing secure embedded systems, software development, and FPGA design services. We implement the best design practices and carefully select technology stacks to ensure optimal embedded solutions for our clients. Our platform engineering services include FPGA design, platform enablement, firmware and driver development, OS porting and bootloader optimization, middleware integration for embedded systems, and more. We have expertise across various platforms, allowing us to assist businesses in building next-generation systems, solutions, and products.

Read our success stories related to embedded system design to learn more about our platform engineering services.

Contact us at business@softnautics.com for any queries related to your solution or for consultancy.

