Applications and Opportunities for Video Analytics

In recent years, video analytics solutions have emerged as a high-end technology that has changed the way we interpret and analyze video data. Video analytics uses advanced algorithms and artificial intelligence (AI) to track behaviour and understand video data in real time, making it possible to automate the necessary actions. The technology has found applications across many industries, providing valuable insights, strengthening security, improving safety, and optimizing operations. According to Verified Market Research, the video analytics market is growing rapidly: its global value is projected to reach USD 35.88 billion by 2030, up from USD 5.65 billion in 2021, representing a CAGR of 21.5%. This growth highlights the increasing demand for video analytics solutions as organizations seek to enhance their security and surveillance systems.
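The growth rate quoted above follows the standard compound annual growth rate (CAGR) formula, CAGR = (end value / start value)^(1 / years) - 1. Here is a minimal sketch that recomputes it from the two market values. The report's exact forecast window is not stated here, so the nine annual compounding periods from 2021 to 2030 are an assumption.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values quoted above, in USD billions.
# Assumption: nine compounding years between 2021 and 2030.
rate = cagr(5.65, 35.88, 9)
print(f"Implied CAGR: {rate:.1%}")
```

With that assumption the implied rate works out to roughly 22.8%, slightly above the quoted 21.5%. The difference may come from a different base year or forecast window in the original report, so treat this as a sanity check rather than a correction of the cited figure.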

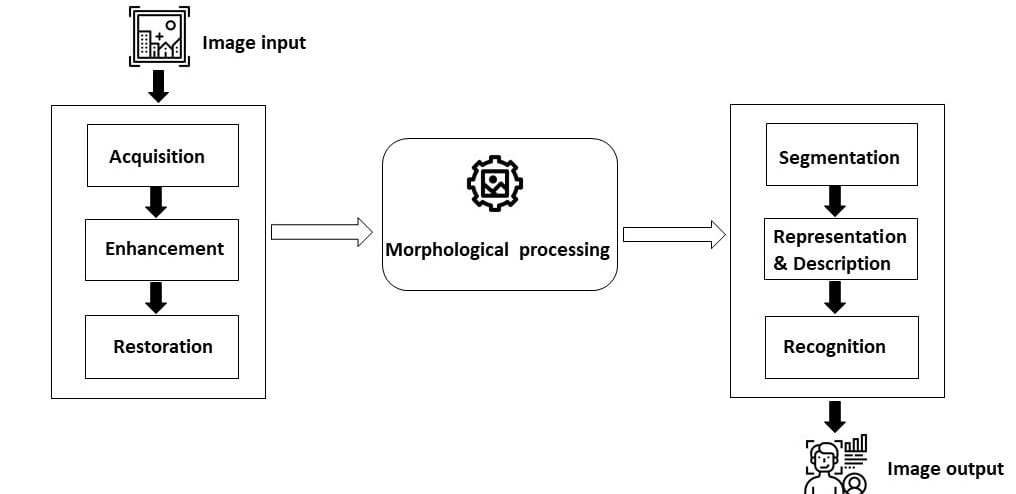

Video analytics is closely related to video processing, an essential part of any multimedia solution. Video analytics extracts meaningful insights and information from video data using various computational techniques: it builds on the capabilities of video processing to analyze and interpret video content, enabling computers to automatically detect, track, and understand objects, events, and patterns within video streams. Video processing techniques are used in a wide range of applications, including surveillance, video streaming, multimedia communication, autonomous vehicles, medical imaging, entertainment, and virtual reality, among many others.
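One of the simplest video-processing steps behind automatic detection is frame differencing: compare consecutive frames and flag pixels whose brightness changed noticeably. The sketch below is an illustration under stated assumptions, not a production method. Frames are plain nested lists of grayscale values (0 to 255) to keep it dependency-free, and the `threshold` and `min_fraction` values are hypothetical. A real pipeline would decode frames with a video library and use vectorized array operations.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of 0-255 pixel intensities


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than `threshold` between frames."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def has_motion(prev: Frame, curr: Frame, threshold: int = 25,
               min_fraction: float = 0.01) -> bool:
    """Report motion when more than `min_fraction` of all pixels changed."""
    mask = motion_mask(prev, curr, threshold)
    changed = sum(sum(row) for row in mask)  # True counts as 1
    total = sum(len(row) for row in mask)
    return total > 0 and changed / total > min_fraction


# Usage: a bright object appears in one pixel of a uniform 4x4 frame
background = [[10] * 4 for _ in range(4)]
moved = [row[:] for row in background]
moved[1][2] = 200
print(has_motion(background, moved))       # True: 1 of 16 pixels changed
print(has_motion(background, background))  # False: nothing changed
```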

In this article, we look at some industrial applications and use cases of video analytics in different areas.

Industrial Applications of Video Analytics

Automotive

One of the most prominent uses of video analytics is in the automotive industry, in the Advanced Driver Assistance Systems (ADAS) of highly automated vehicles (HAVs). HAVs use multiple cameras to identify pedestrians, traffic signals, other vehicles, lanes, and other indicators. The cameras are integrated with the electronic control unit (ECU), which is programmed to assess the situation in real time and respond accordingly. Automating this process requires integrating various systems on a chip (SoCs); these chipsets connect the sensors to the actuators through interfaces and ECUs. The data is analysed with deep learning models, a class of machine learning (ML) models that use neural networks to learn patterns in data. Neural networks are structured as multiple layers of interconnected processing nodes. These deep learning algorithms are used to detect and track objects in real-time video and to recognize specific actions.
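To make the idea of "layers of interconnected processing nodes" concrete, here is a toy forward pass through a small fully connected network. In each layer, every output node computes a weighted sum of all inputs plus a bias. This is only an illustration: real ADAS perception models are typically large convolutional networks trained on camera images. The weights and the two output classes below are hypothetical, hand-picked values, not a trained model.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output node
Layer = Tuple[Matrix, Vector]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each output node sums every input times its weight."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values: Vector) -> Vector:
    return [max(0.0, v) for v in values]


def softmax(values: Vector) -> Vector:
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(features: Vector, layers: List[Layer]) -> Vector:
    """Hidden layers use ReLU; the last layer's outputs become class probabilities."""
    x = features
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(x, weights, biases))


# Hypothetical weights: 3 input features -> 2 hidden nodes -> 2 output classes
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
]
probs = forward([1.0, 0.5, 0.2], layers)
print(probs)
```

Training, which adjusts the weights from labelled examples, is what lets such a network "learn patterns in data". It is omitted here to keep the sketch focused on the layered structure.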

Sports

In the sports industry, coaches, personal trainers, and professional athletes use video analytics to optimize performance through data-driven insights. In sports such as rugby and soccer, tracking metrics like ball possession and the number of passes has become standard practice for understanding game patterns and team performance. Detailed research on soccer has shown that analyzing ball possession can even influence the outcome of a match. Video analytics can also provide insights into the playing style, strategies, passing patterns, and weaknesses of the opposing team, giving a better understanding of their gameplay.
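Once an upstream detection and tracking stage has turned the video into a sequence of ball touches, metrics like possession and passes reduce to simple bookkeeping. The sketch below assumes a hypothetical `Touch` event (time, team, player) emitted in chronological order. It also uses one common simplification: a team "possesses" the ball from its touch until the next touch, and a pass is two consecutive touches by different players of the same team.

```python
from collections import Counter
from typing import Dict, List, NamedTuple


class Touch(NamedTuple):
    """A ball touch, as a hypothetical upstream tracker might emit it."""
    time_s: float
    team: str
    player: int


def possession_share(touches: List[Touch], match_end_s: float) -> Dict[str, float]:
    """Credit the time until the next touch to the team that touched the ball last."""
    held: Counter = Counter()
    for i, touch in enumerate(touches):
        end = touches[i + 1].time_s if i + 1 < len(touches) else match_end_s
        held[touch.team] += end - touch.time_s
    total = sum(held.values())
    if not total:
        return {}
    return {team: seconds / total for team, seconds in held.items()}


def completed_passes(touches: List[Touch]) -> Counter:
    """Count consecutive touches by two different players of the same team."""
    passes: Counter = Counter()
    for a, b in zip(touches, touches[1:]):
        if a.team == b.team and a.player != b.player:
            passes[a.team] += 1
    return passes


# Usage with a short, made-up touch log
log = [
    Touch(0.0, "A", 7), Touch(4.0, "A", 10), Touch(9.0, "B", 5),
    Touch(12.0, "B", 8), Touch(15.0, "A", 7),
]
print(possession_share(log, match_end_s=20.0))  # team A about 70%, team B about 30%
print(completed_passes(log))                    # one pass for each team
```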

Video Analytics Applications

Retail

Intelligent video analytics is a valuable tool that lets retailers monitor storefront events and respond promptly to improve the customer experience. Cameras capture real-time video of areas such as shelf inventory, curbside pickup, and cashier queues. On-site IoT edge devices analyze the video in real time to detect key metrics, such as the number of people in checkout queues, empty shelf space, or cars in the parking lot.

Analyzing these metrics makes it possible to catch anomalous events early and alert store managers or stock supervisors to take corrective action. Video clips or events can also be stored in the cloud for long-term trend analysis, providing valuable insights for future decision-making.
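As an illustration of how an edge device might turn detections into a queue metric and an alert, here is a sketch under stated assumptions. A person detector (not shown) is assumed to return bounding boxes per frame. A person counts as "in the queue" when the centre of their box falls inside a hypothetical checkout zone. To avoid alerting on a single noisy frame, an alert fires only after the queue has stayed too long for several consecutive frames. The zone coordinates and thresholds are hypothetical.

```python
from typing import Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def in_zone(box: Box, zone: Box) -> bool:
    """True if the box's centre point lies inside the zone rectangle."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return zone[0] <= cx <= zone[2] and zone[1] <= cy <= zone[3]


def queue_alerts(frames: Iterable[List[Box]], zone: Box,
                 max_people: int, min_frames: int) -> List[int]:
    """Return frame indices where the queue has exceeded `max_people`
    for `min_frames` consecutive frames (one alert per sustained episode)."""
    alerts: List[int] = []
    streak = 0
    for index, person_boxes in enumerate(frames):
        count = sum(in_zone(b, zone) for b in person_boxes)
        streak = streak + 1 if count > max_people else 0
        if streak == min_frames:
            alerts.append(index)
    return alerts


# Usage with made-up detections
checkout_zone = (0, 0, 100, 100)
person = (10, 10, 30, 50)      # centre (20, 30) is inside the zone
outside = (150, 10, 170, 50)   # centre (160, 30) is outside the zone
frames = [[person], [person] * 3, [person] * 3, [person] * 3, [outside]]
print(queue_alerts(frames, checkout_zone, max_people=2, min_frames=2))  # [2]
```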

Health Care

Video analytics has emerged as a transformative technology in healthcare, offering significant benefits in patient care and operational efficiency. Using cutting-edge machine learning algorithms and computer vision, these systems can analyze video data in real time to automatically detect and interpret signs of various diseases in the human body. Video analytics can also be used for patient monitoring, detecting emergencies, identifying wandering behaviour in dementia patients, and analyzing crowd behaviour in waiting areas. These capabilities enable healthcare providers to proactively address potential issues, optimize resource allocation, and enhance patient safety, leading to improved patient outcomes and a higher quality of care. With ongoing advancements in technology, video analytics is poised to play a crucial role in shaping the future of healthcare, making it more intelligent, efficient, and patient-centric.
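Wandering detection could be approached in many ways. One simple heuristic flags a lot of walking with little net progress, such as pacing back and forth along a corridor. The sketch below assumes a hypothetical upstream tracker that maps a patient's position onto floor-plan coordinates in metres. It computes a path "efficiency": the straight-line distance from start to end divided by the total distance walked. The thresholds are illustrative assumptions and have not been clinically validated.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # position on a floor plan, in metres


def path_length(track: List[Point]) -> float:
    """Total distance walked along consecutive tracked positions."""
    return sum(math.dist(a, b) for a, b in zip(track, track[1:]))


def looks_like_wandering(track: List[Point], min_path_m: float = 20.0,
                         max_efficiency: float = 0.2) -> bool:
    """Flag long, aimless movement: lots of walking but little net displacement."""
    walked = path_length(track)
    if walked < min_path_m:
        return False  # not enough movement to judge
    efficiency = math.dist(track[0], track[-1]) / walked
    return efficiency < max_efficiency


# Usage with made-up tracks
pacing = [(0.0, 0.0), (5.0, 0.0)] * 4 + [(0.0, 0.0)]          # back and forth, 40 m walked
direct = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]  # straight to a destination
print(looks_like_wandering(pacing), looks_like_wandering(direct))  # True False
```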

To summarize, video analytics is a rapidly growing field that leverages technologies such as computer vision, deep learning, image and video processing, motion detection and tracking, and data analysis to extract valuable insights. It has found applications in diverse domains, including security and surveillance, healthcare, automotive, and sports. By automating the analysis of video data, video analytics enables organizations to process large amounts of visual information efficiently, identify patterns and behaviours, and make data-driven decisions more effectively and at lower cost.
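Tracking, one of the technologies listed above, keeps a stable identity for each object as it moves from frame to frame. A minimal approach is greedy nearest-centroid matching: pair each new detection with the closest object from the previous frame, and start a new track when nothing is close enough. The sketch below uses that simple technique with a hypothetical `max_distance` gate, and it drops unmatched tracks immediately. Production trackers typically add motion prediction (for example a Kalman filter), optimal assignment, and tolerance for brief occlusions.

```python
import math
from itertools import count
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


class CentroidTracker:
    """Greedy nearest-centroid tracker (no motion model, no re-identification)."""

    def __init__(self, max_distance: float = 50.0):
        self.max_distance = max_distance
        self.objects: Dict[int, Point] = {}
        self._ids = count()

    def update(self, centroids: List[Point]) -> Dict[int, Point]:
        # All (distance, track id, detection index) pairs, closest first
        pairs = sorted(
            (math.dist(prev, new), obj_id, i)
            for obj_id, prev in self.objects.items()
            for i, new in enumerate(centroids)
        )
        matched_ids: Set[int] = set()
        matched_dets: Set[int] = set()
        updated: Dict[int, Point] = {}
        for dist, obj_id, i in pairs:
            if dist > self.max_distance:
                break  # every remaining pair is even farther apart
            if obj_id in matched_ids or i in matched_dets:
                continue  # each track and each detection is used once
            updated[obj_id] = centroids[i]
            matched_ids.add(obj_id)
            matched_dets.add(i)
        # Unmatched detections start new tracks; unmatched old tracks are dropped
        for i, c in enumerate(centroids):
            if i not in matched_dets:
                updated[next(self._ids)] = c
        self.objects = updated
        return dict(updated)


# Usage: two objects keep their IDs even though detection order changes
tracker = CentroidTracker(max_distance=30)
print(tracker.update([(10, 10), (200, 50)]))  # {0: (10, 10), 1: (200, 50)}
print(tracker.update([(205, 55), (15, 12)]))  # {0: (15, 12), 1: (205, 55)}
```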

With continuous advancements in technology, we at Softnautics help businesses across various industries build intelligent media solutions, from the simplest to the most complex multimedia technologies. We have hands-on experience in designing high-performance media applications, architecting complete video pipelines, audio/video codec engineering, application porting, and ML model design, optimization, testing, and deployment.

We hope you enjoyed this article and gained a better understanding of how intelligent solutions based on video analytics can help businesses automate processes, improve efficiency and accuracy, and make better decisions.

Read our success stories related to intelligent media solutions to know more about our multimedia engineering services.

Contact us at business@softnautics.com for any queries related to your solution or for consultancy.

