Optimizing embedded software for real-time multimedia processing

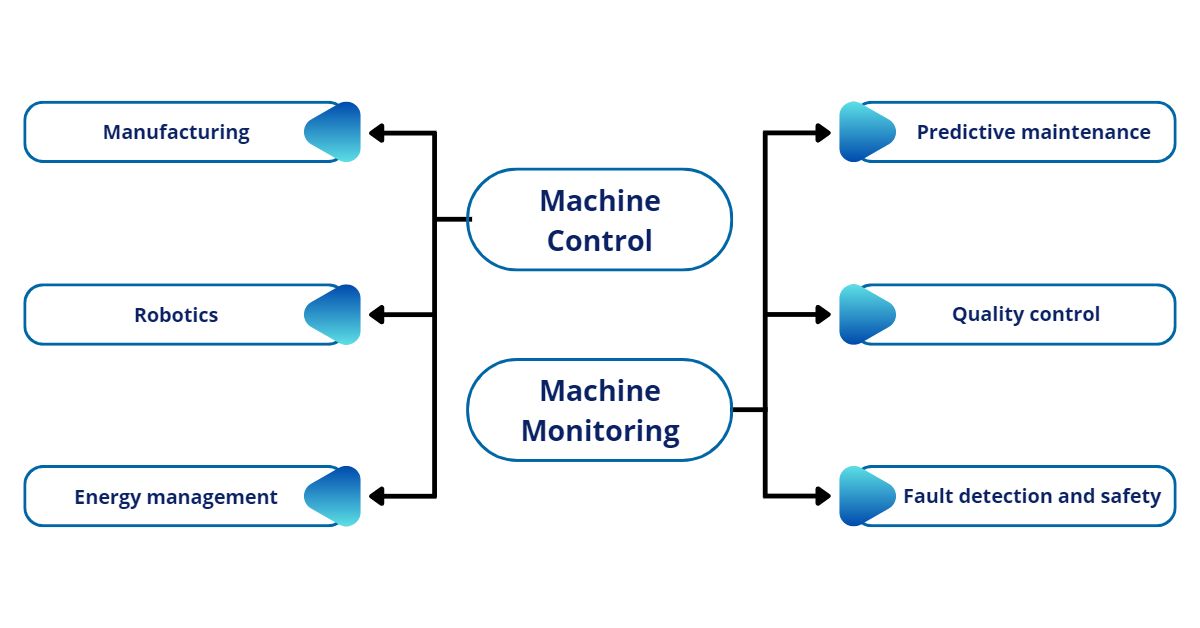

The demands of multimedia processing are diverse and ever-increasing. Modern consumers expect immediate, high-quality audio and video experiences: smart speakers that recognize voice commands swiftly, online meetings that run smoothly, and entertainment systems that deliver clear visuals and sound. Multimedia applications must now handle several data types at once, such as audio, video, and text, and keep them interacting seamlessly in real time. This requires not only efficient algorithms but also an underlying embedded software infrastructure capable of fast processing and careful resource management.

Embedded systems, which combine hardware and software to perform specific functions, are used across many industries. The global embedded system market is expected to reach around USD 173.4 billion by 2032, growing at a 6.8% CAGR, and this growth is fuelled by rising demand for optimized embedded software solutions.

These systems face substantial demands and must perform without glitches. Media and entertainment consumers expect uninterrupted streaming of high-definition content, while the automotive sector relies on multimedia systems for navigation, infotainment, and in-cabin experiences. Gaming, consumer electronics, and security and surveillance are other domains where multimedia applications play an important role.

Understanding embedded software optimization

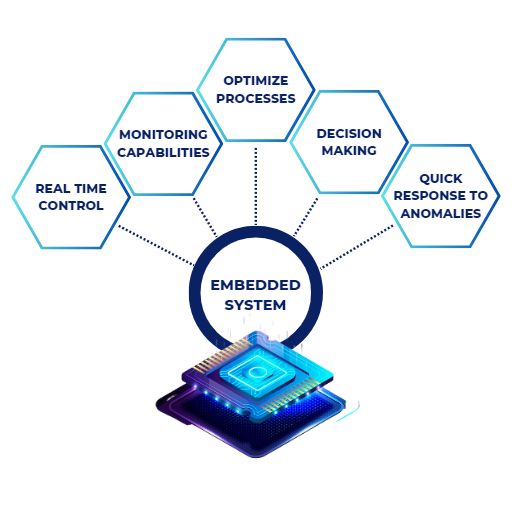

Embedded software optimization is the art of fine-tuning software so that it runs at peak efficiency and responds promptly to the user's commands. In multimedia, this means improving the performance of the software that drives audio solutions, video solutions, multimedia systems, infotainment, and more. Embedded software is the bridge between the user's commands and the hardware that carries them out: it must manage memory, allocate resources carefully, and execute complex algorithms within tight timing constraints. At its core, embedded software optimization is about making sure every CPU cycle and every byte of memory is put to good use.

Performance enhancement techniques

To optimize embedded software for real-time multimedia processing, several performance enhancement techniques come into play. These techniques help the software run smoothly and deliver the highest possible performance.

- Code optimization: Code optimization is the careful refinement of source code to make it more efficient. It involves choosing algorithms and implementations that minimize processing time, reduce resource consumption, and eliminate redundant computation.

- Parallel processing: Parallel processing allows multiple tasks to execute simultaneously, for example on separate CPU cores or on a CPU and a DSP. This significantly improves the system's ability to handle complex operations in real time. In a multimedia player, for instance, the audio and video streams can be decoded in parallel, while a synchronization mechanism keeps them aligned for seamless playback.

- Hardware acceleration: Hardware acceleration is a game-changer in multimedia processing. It offloads specific tasks, such as video encoding and decoding, to dedicated hardware blocks designed for those functions. Hardware acceleration can dramatically improve performance, particularly for compute-intensive tasks such as video rendering and AI-based image recognition.
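To make the code optimization point concrete, here is a minimal sketch in C, assuming a core without a fast floating-point unit. It applies a volume gain to 16-bit PCM audio using Q15 fixed-point arithmetic, converting the gain once outside the loop so the per-sample work is a single integer multiply and shift. The names `gain_to_q15` and `apply_gain_q15` are hypothetical, chosen only for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: convert a gain in [0.0, 1.0) to Q15 once, outside
 * the per-sample loop, so the loop itself uses only integer operations. */
int16_t gain_to_q15(double gain)
{
    assert(gain >= 0.0 && gain < 1.0);
    return (int16_t)(gain * 32768.0);
}

/* Hypothetical example: scale n PCM samples in place by a Q15 gain. */
void apply_gain_q15(int16_t *samples, size_t n, int16_t gain_q15)
{
    assert(gain_q15 >= 0);
    for (size_t i = 0; i < n; ++i) {
        /* 16x16 -> 32-bit multiply, then shift back down by 15 bits.
         * Right-shifting a negative value is implementation-defined in C;
         * this assumes the arithmetic shift used by mainstream ARM and
         * x86 compilers. */
        int32_t product = (int32_t)samples[i] * gain_q15;
        samples[i] = (int16_t)(product >> 15);
    }
}
```

For example, `apply_gain_q15(buf, n, gain_to_q15(0.5))` halves the amplitude of every sample in `buf`. Restricting the gain to [0, 1) keeps every result inside the `int16_t` range, so no saturation step is needed; gains above 1.0 would require a different Q format plus clamping.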

Memory management

Memory management is a critical aspect of optimizing embedded software for multimedia processing. Multimedia systems require quick access to data, and memory management ensures that data is stored and retrieved efficiently. Effective memory management can make the difference between a smooth, uninterrupted multimedia experience and a system prone to lags and buffering.

Efficient memory management involves several key strategies.

- Caching: Frequently used data is kept in fast memory, such as CPU caches or on-chip RAM, for rapid access. This minimizes the need to fetch data from slower storage devices, reducing latency.

- Memory leak prevention: Memory leaks, where portions of memory are allocated but never released, gradually consume system resources and can eventually destabilize long-running devices. Embedded software must be carefully designed to prevent them.

- Memory pools: A memory pool is like a pre-booked region of memory, reserved in advance and divided into fixed-size blocks. Instead of dynamically allocating and freeing memory from the general-purpose heap as needed, the software takes blocks from the pool and returns them when done. This proactive approach minimizes memory fragmentation, makes allocation time predictable, and reduces the overhead of constantly managing memory on the fly.
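A fixed-block memory pool of the kind described above can be sketched in a few lines of C. This is a minimal illustration under assumed parameters (8 blocks of 256 bytes); the names `mem_pool`, `pool_init`, `pool_alloc`, and `pool_free` are hypothetical. All storage is reserved up front, and allocation and release are constant-time operations on a free list, so there is no heap fragmentation and no unpredictable allocation latency.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical pool parameters, e.g. for audio packet buffers.
 * Real sizes depend on the application. */
#define POOL_BLOCK_SIZE  256u
#define POOL_BLOCK_COUNT 8u

typedef union pool_block {
    union pool_block *next;              /* link used while the block is free */
    max_align_t align;                   /* forces suitable alignment for any type */
    unsigned char data[POOL_BLOCK_SIZE]; /* payload used while allocated */
} pool_block;

typedef struct {
    pool_block blocks[POOL_BLOCK_COUNT]; /* storage reserved in advance */
    pool_block *free_list;               /* singly linked list of free blocks */
} mem_pool;

/* Thread every block onto the free list once, at start-up. */
void pool_init(mem_pool *p)
{
    p->free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; ++i) {
        p->blocks[i].next = p->free_list;
        p->free_list = &p->blocks[i];
    }
}

/* O(1) allocation: pop the head of the free list, or NULL if exhausted. */
void *pool_alloc(mem_pool *p)
{
    pool_block *b = p->free_list;
    if (b != NULL)
        p->free_list = b->next;
    return b;
}

/* O(1) release: push the block back onto the free list. */
void pool_free(mem_pool *p, void *ptr)
{
    if (ptr == NULL)
        return;
    pool_block *b = ptr;
    /* Debug check: the pointer must belong to this pool. */
    assert(b >= &p->blocks[0] && b < &p->blocks[POOL_BLOCK_COUNT]);
    b->next = p->free_list;
    p->free_list = b;
}
```

This version is not thread-safe. If several threads (for example, an audio decoder and a network receiver) share one pool, the free-list operations need a lock or a lock-free design; alternatively, each thread can own its own pool.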


Real-time communication

Real-time communication is the essence of multimedia applications. Embedded software must facilitate immediate interactions between users and the system, ensuring that commands are executed without noticeable delay. This real-time capability is fundamental to providing an immersive multimedia experience.

In multimedia, real-time communication encompasses various functionalities. In video conferencing, for example, it keeps audio and video streams synchronized, preventing awkward lags in conversation. In gaming, it enables real-time rendering of complex 3D environments and instantaneous response to user input. Integrating real-time communication seamlessly into multimedia applications ensures immediate responsiveness and lays the foundation for an enriched, immersive user experience across diverse interactive platforms.
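One common way to keep audio and video synchronized is to treat the audio clock as the master and decide, for each decoded video frame, whether to show it, hold it, or drop it by comparing its presentation timestamp (PTS) against that clock. The sketch below assumes microsecond timestamps and a hypothetical ±40 ms tolerance; `av_sync_decide`, `av_action`, and the threshold value are illustrative, not taken from a particular player or standard.

```c
#include <stdint.h>

/* Possible actions for the next decoded video frame. */
typedef enum { AV_WAIT, AV_SHOW, AV_DROP } av_action;

/* Hypothetical tolerance of +/-40 ms; real players tune this per use case. */
#define AV_SYNC_THRESHOLD_US 40000

/* Decide what to do with a video frame whose presentation timestamp is
 * frame_pts_us, given the current audio (master) clock audio_clock_us. */
av_action av_sync_decide(int64_t frame_pts_us, int64_t audio_clock_us)
{
    int64_t diff = frame_pts_us - audio_clock_us; /* > 0: frame is early */

    if (diff > AV_SYNC_THRESHOLD_US)
        return AV_WAIT;  /* too early: hold the frame until audio catches up */
    if (diff < -AV_SYNC_THRESHOLD_US)
        return AV_DROP;  /* too late: skip it so video catches up with audio */
    return AV_SHOW;      /* within tolerance: display it now */
}
```

A render loop would call this for each frame: `AV_WAIT` means sleep briefly and check again, and `AV_DROP` means discard the frame and move on. Using audio as the master clock is a common choice because small adjustments to video timing are usually less noticeable than gaps or glitches in the audio.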

The future of embedded software in multimedia

The future of embedded software in multimedia systems promises even more advanced features. Embedded AI solutions are becoming increasingly integral to multimedia, enabling capabilities like voice recognition, content recommendation, and automated video analysis. As embedded software development in this domain continues to advance, it will need to meet the demands of emerging trends and evolving consumer expectations.

In conclusion, optimizing embedded software for real-time multimedia processing is a subtle and intricate challenge. It requires a deep understanding of the demands of multimedia processing, unwavering dedication to software optimization, and the strategic deployment of performance enhancement techniques. Together, these ensure that multimedia systems can consistently deliver seamless, immediate, and high-quality audio and video experiences. Embedded software remains the driving force behind the multimedia solutions that have become part of our daily lives.

At Softnautics, a MosChip company, we excel in optimizing embedded software for real-time multimedia processing. Our team of experts specializes in fine-tuning embedded systems & software for peak efficiency, enabling seamless and instantaneous processing of audio, video, and diverse media types. With a focus on enhancing performance in multimedia applications, our services span the design of audio/video solutions, multimedia systems & devices, media infotainment systems, and more. We work across various architectures and platforms, including multi-core ARM, DSPs, GPUs, and FPGAs, and our embedded software optimization is a crucial element in meeting the evolving demands of the multimedia industry.

Read our success stories to learn more about our multimedia engineering services.

Contact us at business@softnautics.com for any queries related to your solution design or for consultancy.

